AI Agents: Revolutionizing Artificial Intelligence

In this article, we will delve deep into the world of AI agents, exploring their foundations, their architecture, and the various building blocks that compose them. We will also look at how they can be integrated into different fields, the benefits they bring, and why these technologies are attracting growing interest in businesses and among the general public.

Series of articles on AI

Here is the third article in a four-part series:

- LLMs: understanding what they are and how they work.

- NLP: an exploration of natural language processing.

- AI Agents: a look at autonomous artificial intelligences (current article).

- Comparison and positioning of AI Smarttalk: a summary and perspective.

Introduction

In recent years, artificial intelligence (AI) has gained increasing popularity, fueled notably by the democratization of powerful natural language processing (NLP) models and large language models (LLMs). Nowadays, these technologies go beyond mere text generation or auto-completion: they give rise to more complex, more autonomous systems capable of acting and interacting on behalf of the user. These systems—commonly referred to as AI agents—are designed to handle all sorts of tasks, from simply answering frequent questions to managing an entire complex process.

But what do we really mean by AI agent? What are the technological components that make it up? How does an AI agent manage to understand requests, reason, and make decisions? To answer these questions, we will first define what an AI agent is and then look into how its perception and decision engines interact. We will also examine the key role played by knowledge retrieval (or Knowledge Base) and the usefulness of calling upon tools (the Tool Call) to carry out specific actions. Finally, we will see how memory helps maintain context and improve the relevance of interactions over time.

What Is an AI Agent?

An AI agent is a software program capable of making decisions and performing actions (or, more simply, providing answers) in an autonomous manner, relying on artificial intelligence methods. The agent is generally designed to converse with a user (via text or voice) and to carry out specific tasks by using external resources, knowledge bases, or various tools.

These agents rely on natural language processing (NLP) to understand requests and to communicate clearly. But if we limit ourselves to traditional NLP approaches, we quickly run into constraints: a conventional chatbot has a restricted vocabulary and relatively rigid behavior. This is where large language models (LLMs) come in: they can comprehend and generate text in a much more nuanced, almost “human” way.

To accomplish their missions, AI agents often incorporate various complementary modules. One handles perception (or language understanding), another handles decision (or planning actions), and there are also modules for knowledge retrieval and memory. Add to that the ability to call upon external tools, and you get systems that can genuinely “act” autonomously in a given environment.

A Modular Architecture

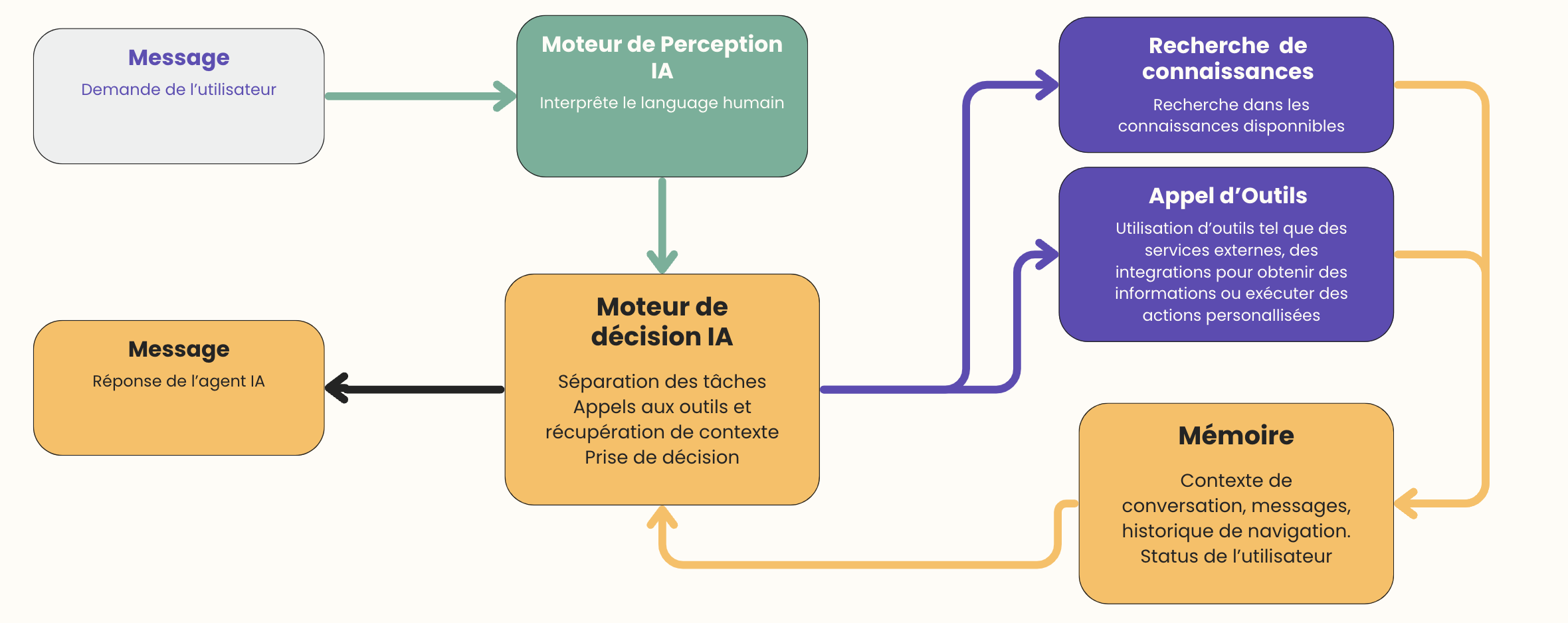

To explain the operational principle of an AI agent, we can visualize the flow of information as follows:

- Message (User’s request): The (human) user formulates a request or question.

- Perception Engine: The perception engine analyzes the sentence, identifies the intent, context, and key elements.

- Decision Engine: The decision engine plans the necessary steps, potentially searches for additional information, calls upon tools if needed, and prepares a response or action.

- Knowledge Base: A module that searches a website’s or a company’s knowledge (documents, indexes, retrieval-augmented generation, etc.) to enrich the agent’s answer.

- Tool Call: Calls on an external tool to solve a problem, send an email, query an API, etc.

- Memory: The conversation’s history, user preferences, results from previous actions, etc.

- Message: The final answer sent back to the user.

Each block thus has its role to play and can be implemented separately. This modularity is crucial, as it allows for the independent improvement or replacement of each component in order to adapt to technological developments and the specific needs of each company or project.
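The flow above can be sketched in a few lines of Python. This is only an illustrative skeleton, not a reference implementation: the `Agent` class and the `toy_*` functions are hypothetical stand-ins, and in a real agent the perception and decision callables would typically wrap an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    # Each block is a plain callable, so any one of them can be swapped independently.
    perceive: Callable[[str], dict]
    decide: Callable[[dict, List[str]], dict]
    knowledge: Callable[[str], str]
    tools: Dict[str, Callable[[dict], str]]
    memory: List[str] = field(default_factory=list)

    def handle(self, message: str) -> str:
        parsed = self.perceive(message)            # Perception Engine
        plan = self.decide(parsed, self.memory)    # Decision Engine
        parts = []
        if plan.get("lookup"):                     # Knowledge Base
            parts.append(self.knowledge(plan["lookup"]))
        if plan.get("tool"):                       # Tool Call
            parts.append(self.tools[plan["tool"]](parsed))
        reply = " ".join(parts) or "Could you tell me more?"
        self.memory.append(f"user: {message}")     # Memory
        self.memory.append(f"agent: {reply}")
        return reply


# Hypothetical stand-ins; a real agent would use an LLM or NLP model here.
def toy_perceive(message: str) -> dict:
    intent = "order_status" if "order" in message.lower() else "unknown"
    return {"intent": intent, "text": message}


def toy_decide(parsed: dict, memory: List[str]) -> dict:
    if parsed["intent"] == "order_status":
        return {"lookup": "orders", "tool": None}
    return {}


def toy_knowledge(topic: str) -> str:
    return {"orders": "Orders ship within 48 hours."}.get(topic, "")


agent = Agent(toy_perceive, toy_decide, toy_knowledge, tools={})
print(agent.handle("Where is my order?"))
```

Because every block is passed in as a callable, replacing the toy perception function with a model-backed one does not require touching the rest of the pipeline.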

The Perception Engine: Understanding Human Language

The first essential building block for an AI agent is its ability to understand what the user expresses. This is the role of the perception engine. Where a traditional chatbot might have relied on a decision tree (with fixed keywords), a current perception engine is often based on an LLM or on advanced NLP algorithms.

How Does It Work?

- Semantic analysis: The engine identifies the overall structure and meaning of the sentence.

- Entity extraction: It extracts key elements (dates, locations, product names, etc.).

- Intent detection: It attempts to discern the purpose of the request (e.g., “place an order,” “ask for help,” “get information,” etc.).

Thanks to LLMs, these steps are becoming more and more accurate, even in complex use cases or when the user does not express themselves very clearly. Additionally, some perception engines are referred to as multimodal: they can handle not only text but also images, videos, or even audio files.
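To make entity extraction and intent detection concrete, here is a deliberately simple sketch that uses regular expressions and keyword lists instead of an LLM. The `INTENT_KEYWORDS` table, the `Perception` class, and the entity patterns are assumptions chosen for illustration; a production perception engine would be far more robust.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical intent keywords; an LLM-based engine would not need such a table.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "modify_order": ["change", "modify", "update"],
    "ask_for_help": ["help", "problem", "issue"],
    "get_information": ["how", "what", "where", "when"],
}


@dataclass
class Perception:
    intent: str
    entities: Dict[str, List[str]] = field(default_factory=dict)


def perceive(message: str) -> Perception:
    text = message.lower()
    words = re.findall(r"[a-z']+", text)

    # Entity extraction: order numbers and ISO-style dates.
    entities = {
        "order_number": re.findall(r"\border (?:number |#)?(\d+)", text),
        "date": re.findall(r"\b\d{4}-\d{2}-\d{2}\b", message),
    }

    # Intent detection: first intent whose keywords appear in the message.
    intent = "unknown"
    for name, keywords in INTENT_KEYWORDS.items():
        if any(word in keywords for word in words):
            intent = name
            break

    return Perception(intent=intent, entities=entities)


result = perceive("I'd like to change my order number 12345; how do I do that?")
print(result.intent, result.entities["order_number"])
```

The keyword approach breaks as soon as the user phrases things unexpectedly, which is exactly the rigidity that LLM-based perception is meant to overcome.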

The Perception Engine’s Limits

Despite considerable advances, language understanding is never perfect. Current models can be misled by ambiguous phrasing or tricked by unusual contexts. That is why a good AI agent should be able to verify its understanding by asking clarification questions or by turning to knowledge bases to bolster its initial interpretation.

The Decision Engine: Orchestrating the Response and Actions

Once the request has been understood, something has to decide what to do. This is the role of the Decision Engine. You can think of it as a conductor who receives the score (the user’s request, already processed by the Perception Engine) and must then:

- Break the task down into simpler steps (task decomposition, often paired with “chain-of-thought” reasoning in AI terminology).

- Determine whether additional information needs to be obtained from databases, documents, FAQs, etc.

- Decide whether a tool (API, external service, hardware action, etc.) needs to be called to accomplish the request.

- Assemble the final answer or outcome (plan the sequence of steps, formulate the response, etc.).

The Decision Engine often relies on an LLM as well (or a dedicated logic engine) for more refined reasoning. It is not uncommon to see hybrid systems: one LLM for language understanding, another LLM for planning and logic, possibly coupled with coded business rules.

Example: If a customer sends a message: “I’d like to change my order number 12345; how do I do that?”, the Decision Engine processes this information as a request to modify an order. It will then:

- Check whether an order management tool is available,

- Figure out the steps needed to retrieve the order,

- Verify the order’s status (already shipped or not),

- Generate a personalized response,

- Possibly launch the modification process via the relevant API.

Hence, the Decision Engine acts as an operational brain, ensuring consistency between the detected intentions and the actual tasks performed, using the appropriate components.
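The order-modification example can be expressed as a small rule-based planner. This is a sketch under stated assumptions: the `ORDERS` dictionary, the tool name `get_order_status`, and the step labels (including the fallback for shipped orders) are hypothetical, and an LLM-driven Decision Engine would generate such a plan rather than hard-code it.

```python
from typing import Callable, Dict, List

# Hypothetical in-memory order store standing in for an order management tool.
ORDERS: Dict[str, str] = {"12345": "pending", "67890": "shipped"}


def get_order_status(order_id: str) -> str:
    return ORDERS.get(order_id, "not_found")


def decide_order_change(order_id: str, tools: Dict[str, Callable[[str], str]]) -> List[str]:
    """Return the ordered list of steps the agent takes for a change request."""
    steps: List[str] = []

    # 1. Check whether an order management tool is available.
    lookup = tools.get("get_order_status")
    if lookup is None:
        return ["escalate_to_human"]
    steps.append("retrieve_order")

    # 2. Verify the order's status before promising anything.
    status = lookup(order_id)
    steps.append(f"status:{status}")

    # 3. Branch on the status, expressed here as coded business rules.
    if status == "pending":
        steps.append("launch_modification")
    elif status == "shipped":
        steps.append("explain_return_procedure")
    else:
        steps.append("ask_user_to_confirm_order_number")
    return steps


tools = {"get_order_status": get_order_status}
print(decide_order_change("12345", tools))
```

Returning the plan as a list of named steps keeps the decision logic inspectable, which helps when business rules and LLM reasoning are combined in a hybrid system.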

Knowledge Base: Searching for Information

Central to many AI agents is the capacity to look up external knowledge. This functionality is often crucial because, although an LLM may have memorized enormous amounts of information, it may sometimes lack precision or not have the latest version of an internal database.

The Knowledge Base can take various forms:

- Searching a document base (e.g., a collection of PDFs, manuals, FAQs, internal documents).

- Searching a vector-based index, where you look within semantic embeddings for the most relevant passage to answer the query (this is the retrieval step of RAG, Retrieval-Augmented Generation).

- Searching via a conventional search engine API (Google, Bing, etc.).

- Consulting internal databases (CRM, ERP, etc.).

In the example of an AI agent for order management, the Knowledge Base might simply involve querying the internal system to find order #12345 and check its status (paid, pending, shipped, etc.).

The advantage of this module is to avoid providing incomplete or inaccurate answers solely based on the LLM’s “general knowledge.” You thus move towards documented reasoning, where the agent (internally) justifies its response with reliable and up-to-date sources.
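The retrieval idea can be illustrated with a toy example. Note the assumptions: the documents are invented, and the `embed` function below uses simple word counts as a stand-in for real semantic embeddings, so it only matches on shared words. The overall shape (embed the query, rank passages by similarity, place the best passage in the prompt) is what matters.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

# Hypothetical documents standing in for a company knowledge base.
DOCUMENTS = [
    "Orders can be modified until they are shipped.",
    "Refunds are issued within ten days of receiving the returned product.",
    "Our support team is available by email every day.",
]


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real system would use a learned embedding model.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[Tuple[float, str]]:
    q = embed(query)
    scored = [(cosine(q, embed(doc)), doc) for doc in documents]
    return sorted(scored, reverse=True)[:top_k]


def build_prompt(query: str, documents: List[str]) -> str:
    # The retrieved passage goes into the prompt so the answer can rely on it.
    passages = "\n".join(doc for _, doc in retrieve(query, documents))
    return f"Answer using only this context:\n{passages}\n\nQuestion: {query}"


print(build_prompt("How do I get refunds for a product?", DOCUMENTS))
```

The prompt produced by `build_prompt` would then be sent to the language model, which is how the answer ends up grounded in a known source rather than in the model's general knowledge.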

Tool Call: When AI Acts on the World

Answering questions is good, but acting to solve a problem is even better. That is the difference between a passive chatbot and an AI agent that can take on concrete actions.

The Tool Call refers to calling an external tool or service to carry out an operation such as:

- Sending an email,

- Placing an order,

- Updating a customer file,

- Running a script,

- Modifying a calendar, etc.

Thanks to this capability, an AI agent can go beyond mere discussion and directly solve the issue at hand. For example:

- When a user asks, “Can you call my supplier to push back the delivery date?”, the AI agent can use a telephony or email API to contact that supplier.

- When a customer wants to “Obtain a refund for product X,” the AI agent can initiate the refund procedure with the relevant payment or logistics service.

Essentially, the Tool Call gives the AI agent a degree of “action power” within the digital environment. Of course, that requires security and controls to be in place to prevent abuse or malicious actions. Access to tools must be regulated and traceable.
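One way to make tool access "regulated and traceable" is to route every call through a registry that checks an allow-list and records each attempt. The sketch below is an assumption about how this could look; `ToolRegistry`, `send_email`, and `issue_refund` are hypothetical names, and the tools only return strings instead of calling a real email or payment API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class ToolRegistry:
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    allowed: Set[str] = field(default_factory=set)        # tools the agent may call
    audit_log: List[dict] = field(default_factory=list)   # trace of every attempt

    def register(self, name: str, func: Callable[..., str], allow: bool = False) -> None:
        self.tools[name] = func
        if allow:
            self.allowed.add(name)

    def call(self, name: str, **kwargs) -> str:
        permitted = name in self.tools and name in self.allowed
        # Log before executing, so refused attempts are traceable too.
        self.audit_log.append({"tool": name, "args": kwargs, "permitted": permitted})
        if not permitted:
            raise PermissionError(f"Tool '{name}' is not available to this agent")
        return self.tools[name](**kwargs)


# Hypothetical tools; real ones would wrap an email or payment API.
def send_email(to: str, subject: str) -> str:
    return f"email queued for {to}: {subject}"


def issue_refund(order_id: str) -> str:
    return f"refund issued for {order_id}"


registry = ToolRegistry()
registry.register("send_email", send_email, allow=True)
registry.register("issue_refund", issue_refund)  # registered but not allowed

print(registry.call("send_email", to="supplier@example.com", subject="Delivery date"))
```

Here a call to `issue_refund` would raise `PermissionError` and still leave an entry in `audit_log`, so both successful and refused actions can be reviewed later.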

Memory: Keeping Track of History and Preferences

Another pillar of an AI agent’s effectiveness is its memory. This memory can manifest in various ways:

- Conversation history: The agent remembers previous exchanges with the user, enabling it to reply coherently in a longer context.

- Results of tool calls: If the agent has carried out a search or performed an action, it can store the result for later reference.

- User preferences or profiles: The agent can remember a customer’s tastes, needs, or specific traits to personalize its approach in subsequent interactions.

This memory is essential for providing an “intelligent” user experience. A chatbot without memory forgets what was just said and ends up asking the same questions again. An AI agent with robust memory, on the other hand, can build trust by keeping the conversation coherent from one exchange to the next.
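The three kinds of memory listed above can be grouped in one small structure. This is a minimal sketch, assuming an in-process store with a fixed turn limit; the `AgentMemory` class and its field names are hypothetical, and a real deployment would usually persist this data in a database.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, Tuple


@dataclass
class AgentMemory:
    max_turns: int = 20
    history: Deque[Tuple[str, str]] = field(default_factory=deque)   # conversation history
    tool_results: Dict[str, str] = field(default_factory=dict)       # results of tool calls
    preferences: Dict[str, str] = field(default_factory=dict)        # user preferences

    def add_turn(self, role: str, text: str) -> None:
        self.history.append((role, text))
        # Drop the oldest turns so the context stays bounded.
        while len(self.history) > self.max_turns:
            self.history.popleft()

    def context(self) -> str:
        """Build the text that would be passed to the model as context."""
        lines = [f"{role}: {text}" for role, text in self.history]
        prefs = ", ".join(f"{k}={v}" for k, v in sorted(self.preferences.items()))
        if prefs:
            lines.insert(0, f"known preferences: {prefs}")
        return "\n".join(lines)


memory = AgentMemory(max_turns=2)
memory.preferences["language"] = "en"
memory.tool_results["order_12345"] = "pending"
memory.add_turn("user", "Hello")
memory.add_turn("agent", "Hi, how can I help?")
memory.add_turn("user", "Where is my order?")
print(memory.context())
```

With `max_turns=2`, the first "Hello" has already been dropped by the time the context is printed, which shows the trade-off between keeping history and keeping the context small.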

From Simple FAQ to AI Agent: A Revolution Underway

For a long time, chatbots amounted to little more than dynamic FAQs: a list of fixed questions and answers, basic scripts, and limited personalization. The emergence of LLMs changed the game by enabling:

- A much more nuanced understanding of language: Users can speak naturally, and the AI agent can (often) understand them and rephrase their intent if necessary.

- Richer, more contextual text generation: The AI agent can explain, argue, tell stories, etc., in a flowing, relevant style.

- Adaptability and learning: Thanks to data and accumulated memory, the agent can improve its answers or adjust its actions.

However, the real revolution is not just replacing a simple chatbot with a “super-chatbot.” The crucial shift is that the AI agent can, via decision modules and tool calls, directly intervene in a digital environment. It can orchestrate operations, interact with information systems, and thus deliver a complete and proactive level of customer support or assistance.

Concrete Use Cases

1. Customer Service and After-Sales Support

In this area, an AI agent can:

- Understand a user’s complaint regarding a defective product,

- Verify the warranty and billing information in the database,

- Initiate a product return (Tool Call) by creating a logistics ticket,

- Update the customer file by logging the claim,

- Inform the user of the procedure to follow (or even send them a confirmation email).

The result: 24/7 customer service that offers a uniform and speedy experience and frees up time for human agents, who can focus on the more complex cases.

2. Sales and Marketing Assistant

Picture an AI assistant capable of:

- Understanding the customer’s exact need (a specific product, a promotional offer, etc.),

- Checking the product catalog and availability,

- Suggesting an alternative product if the first choice is unavailable,

- Launching the order or preparing a quote,

- Sending a confirmation email with a summary.

This AI agent functions as a virtual super-salesperson, guiding the customer through their buying journey—from initial information gathering to final transaction.

3. Advanced Technical Support

An AI agent can:

- Query internal knowledge bases (technical guides, manuals, FAQs) to find the most appropriate solution,

- Ask targeted questions to the user to better understand the nature of the problem,

- Suggest troubleshooting steps (and possibly run a remote diagnostic tool),

- Update the support ticket and keep the customer informed of its progress.

This scenario is particularly useful in the IT field or high-tech after-sales service, where question complexity calls for deep problem understanding and the ability to find the right technical information.

4. Automating Administrative Tasks

An AI agent might:

- Automatically fill out administrative forms,

- Extract data from documents (invoices, contracts, etc.),

- Update records in an HR or accounting program,

- Schedule appointments (Tool Call to a shared calendar),

- Send reminders or notifications.

This automation significantly reduces the burden of repetitive tasks for teams, enabling them to concentrate on higher-value missions.

Challenges and Considerations

Although the promise of AI agents is compelling, several challenges remain:

- Quality of the perception model: Even the best LLMs can make mistakes, invent answers, or misunderstand a query.

- Maintaining coherence over time: The longer the conversation, the more the agent has to manage a large context and avoid inconsistencies.

- Ethical and security issues: Giving an AI agent the ability to act means potentially granting it access to sensitive data or critical features (payments, official emails, etc.). Hence the need for safeguards.

- Infrastructure dependence: The reliability of the AI agent depends on the robustness of hosting and the quality of third-party APIs used.

To address these challenges, organizations often implement hybrid solutions in which the AI agent acts on its own for low-risk tasks and hands off sensitive actions to a human operator for approval. Logging all requests and answers also makes it possible to conduct audits if a problem arises.
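A human-approval gate of this kind can be sketched as follows. The list of sensitive actions, the `execute` function, and the approval callback are all assumptions made for illustration; in practice the approval step would be a ticket, a dashboard, or a notification rather than a function call.

```python
from typing import Callable, List

# Assumed policy: these action names are illustrative, not from a real system.
SENSITIVE_ACTIONS = {"issue_refund", "send_official_email", "make_payment"}

audit_trail: List[dict] = []


def execute(action: str, run: Callable[[], str], approve: Callable[[str], bool]) -> str:
    """Run an action, asking a human first when it is sensitive."""
    needs_approval = action in SENSITIVE_ACTIONS
    approved = approve(action) if needs_approval else True
    result = run() if approved else "not executed: awaiting human approval"
    # Every request and outcome is logged for later audits.
    audit_trail.append(
        {"action": action, "needs_approval": needs_approval,
         "approved": approved, "result": result}
    )
    return result


def deny_all(action: str) -> bool:
    # Stand-in for a human operator who has not approved anything yet.
    return False


print(execute("update_ticket", lambda: "ticket updated", deny_all))
print(execute("issue_refund", lambda: "refund issued", deny_all))
```

Low-risk actions run immediately, while sensitive ones wait for approval, and both paths leave an entry in `audit_trail`.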

Why Invest in an AI Agent?

Despite potential constraints and risks, more and more companies are choosing to develop or integrate an AI agent. Here are some major advantages:

- Improved customer experience: An AI agent can be available 24/7, respond quickly and consistently, and personalize its replies using what it remembers about the user.

- Cost optimization: By automating certain tasks, you reduce the workload for your teams and gain in productivity.

- Time savings: An AI agent can handle a high volume of requests in parallel, without tiring, while handing off complex cases to humans.

- Innovation and differentiation: An intelligent customer service can serve as a strong marketing argument.

- Better data collection: The AI agent can record conversation histories and extract useful statistics (question trends, satisfaction rates, etc.).

Key Principles for Implementing an Effective AI Agent

- Define the scope and objectives: Which tasks must the agent handle? Which actions should it be able to perform? How much autonomy will it have?

- Choose or train the models: Use existing LLMs (provided by major players) or train your own model on internal data.

- Incorporate the “decision” module: Establish the business logic, rules, and how the agent orchestrates various tool calls.

- Link to knowledge bases: Set up a solid Knowledge Base infrastructure—possibly via a vector index or an internal FAQ system.

- Secure and supervise: Manage tool access rights, and set up monitoring for the agent’s answers and actions.

- Consider user experience: Ensure the agent communicates fluently and politely, and can ask clarifying questions when in doubt.

The Role of Omnichannel Integration

An AI agent must also be present where its users are. This means it should be able to integrate into:

- A website (in the form of a widget or chatbot),

- Messaging platforms (Messenger, Instagram, WhatsApp, Discord, Slack, etc.),

- A private client space (intranets, extranets),

- Business software (CRM, ERP, helpdesk).

Thanks to these multiple integrations, the AI agent becomes a single point of contact, delivering consistency and continuity in customer relations, no matter which channel is used. This is known as an omnichannel approach, which streamlines the user journey and boosts overall satisfaction.
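In code, an omnichannel setup often comes down to converting each channel's payload into one common message shape before it reaches the agent. The sketch below assumes two invented payload formats; real platforms define their own webhook structures, so the field names here are purely hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class IncomingMessage:
    channel: str
    user_id: str
    text: str


# Hypothetical payload shapes; real platforms define their own webhook formats.
def from_web_widget(payload: dict) -> IncomingMessage:
    return IncomingMessage("web", payload["session"], payload["message"])


def from_chat_platform(payload: dict) -> IncomingMessage:
    return IncomingMessage("chat", payload["sender"]["id"], payload["text"])


ADAPTERS: Dict[str, Callable[[dict], IncomingMessage]] = {
    "web": from_web_widget,
    "chat": from_chat_platform,
}


def normalize(channel: str, payload: dict) -> IncomingMessage:
    # After this step the agent no longer needs to know which channel was used.
    return ADAPTERS[channel](payload)


print(normalize("web", {"session": "abc", "message": "Hello"}))
print(normalize("chat", {"sender": {"id": "u42"}, "text": "Hello"}))
```

Adding a new channel then means writing one more adapter function, while the agent itself stays unchanged.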

Examples of Typical Interactions

To illustrate, let’s consider a hypothetical scenario where a user contacts the AI agent via an e-commerce website:

- User: “Hello, I got an email inviting me to try out your new service, but I don’t understand how it works.”

- AI Agent: (Perception) Understands that it’s a question about a specific service. (Decision) Checks whether internal documentation is available. (Knowledge Base) Finds an article explaining how it works. (Responds) “Hello, I see you have questions about our new service. Here are the steps…”

- User: “Okay, but where can I set my preferences?”

- AI Agent: (Decision) Identifies the need to update a profile. (Tool Call) Offers a link or executes a script to open the preferences page. “You can update your preferences at this link. Would you like me to redirect you there directly?”

- User: “Yes, thank you.”

- AI Agent: (Memory) Retains the fact that the user updated their preferences, which will be useful later for personalized recommendations.

In this exchange, we can see the interaction among perception, decision, knowledge retrieval, and tool calls, all archived in the agent’s memory for future reference.

Future Prospects

AI agents are set to evolve further, thanks notably to:

- Improved LLMs: More accurate models with greater contextual understanding and more advanced reasoning.

- Greater multimodality: Letting the AI agent process images, video, audio, and not just text.

- Continuous learning: Allowing the agent to learn in real time from new data or interactions.

- Personalization: Making use of detailed user profiles, combined with comprehensive histories, to deliver a hyper-personalized experience.

- Emergence of no-code platforms: Simplifying the design and deployment of AI agents, even for non-technical users.

In the near future, one can imagine each company having its own ecosystem of specialized AI agents: one for sales, another for technical support, a third for internal management, and so on. These agents could collaborate, sharing relevant information to streamline the customer experience and internal productivity.

Conclusion

AI agents represent a major advance in the field of applied artificial intelligence. Far more than a simple chatbot, an AI agent can understand user intent, make informed decisions, call on external tools, and continuously improve through contextual memory.

As technology evolves, the boundary between a virtual assistant, an automated advisor, and a digital collaborator is going to blur. Progress in NLP and LLMs already makes it possible to develop agents that handle a broad spectrum of use cases—from customer service to managing complex business processes.

With this in mind, it is crucial to design AI agents that are robust, secure, and able to integrate seamlessly into an existing environment. The challenges of governance, reliability, and ethics must not be underestimated, yet they do not negate the productivity gains, enhanced customer experience, and innovative capacity offered by these technologies.

For organizations and developers, this is a unique opportunity to stand out by offering intelligent solutions that truly address user needs from start to finish, harnessing all that modern AI has to offer. With the rise of no-code platforms and effortless integration across various channels, we can expect AI agents to quickly become a standard in customer relations and digital transformation for businesses.

All in all, successfully implementing an AI agent relies on a skillful blend of technology, business knowledge, integration strategy, and long-term vision. The possibilities are vast, and those who leverage them will be able to develop new services, new experiences, and new ways to interact with users, partners, and employees. The story is only just beginning, and there is no doubt that AI agents will continue to grow in maturity and sophistication—gradually redefining how we approach communication, collaboration, and automation in both our professional and personal daily lives.